
https://arxiv.org/pdf/2011.14204.pdf

---

Proposed by Amazon Alexa Natural Understanding Team

Limitations of conventional class-aware detection

- Hinders the adoption of trained detectors in real-world applications due to the added cost of retraining them for application-specific object types

- such detectors cannot be used in applications like obstacle detection, where it is more important to determine the location of all objects present in the scene than to find specific kinds of objects

Class-agnostic object detection

- predicting bounding boxes for all objects present in an image irrespective of their object-types

- detect objects of unknown types, which are not present or annotated in the training data

Contribution

- A novel class-agnostic object detection problem formulation as a new research direction

- Training and evaluation protocols for benchmarking and advancing research

- A new adversarial learning framework for class-agnostic detection that penalizes the model if object-type is encoded in the embeddings used for predictions

Related Work

- Two-stage methods for class-agnostic detection could employ these works to generate intermediate object proposals

- based on edge-related features and objectness metrics

→ these proposals do not correspond directly to final detections and must be fed to a detector module to infer final detections

- generating pixel-level objectness scores in order to segment object regions out of background content

- Mason: A model agnostic objectness framework

- Pixel objectness: learning to segment generic objects automatically in images and videos

- the efficacy of convolutional neural networks like AlexNet [21] for localizing objects irrespective of their classes

- also related to few-shot and zero-shot learning, which are targeted towards detecting objects of novel types

Architecture

- From Class-aware to Class-agnostic Detection

- the goal of this task is to predict bounding boxes for all objects present in an image but not their category

- implicit goal for models developed for this task is to generalize to objects of unknown types

- Agnostic requirements

- object retrieval from a large and diverse database

- recognition of application-specific object-types through training a downstream object recognition model (instead of retraining the full detection network)

- Baseline models

- Region proposal network of a two-stage detector

- Class-aware detector trained for object-type classification and bounding box regression

- pretrained class-aware model finetuned end-to-end for object-or-not binary classification instead of object type, along with bounding box regression

- detection model trained from scratch for object-or-not binary classification and bounding box regression.
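The move from class-aware to class-agnostic labels described above can be sketched in a few lines. This is only an illustrative sketch, not the paper's data pipeline: the `Annotation` type and the `to_class_agnostic` helper are hypothetical names introduced here, and the box format `(x1, y1, x2, y2)` is an assumption.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # assumed format: (x1, y1, x2, y2)


@dataclass
class Annotation:
    """Hypothetical class-aware annotation: a box plus its object type."""
    box: Box
    label: str


def to_class_agnostic(annotations: List[Annotation]) -> List[Tuple[Box, int]]:
    """Keep every box, but replace the object type with 1 (= "object").

    Background regions get label 0 inside the detector itself, so the
    classification head only has to answer object-or-not.
    """
    return [(ann.box, 1) for ann in annotations]


anns = [
    Annotation((10, 20, 50, 80), "dog"),
    Annotation((5, 5, 30, 30), "cat"),
]
agnostic = to_class_agnostic(anns)
```

The boxes are untouched, so bounding box regression is trained exactly as before; only the classification target changes.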

Adversarial Learning Framework for Class-agnostic Object Detection

- Ignore type-distinguishing features so that they can better generalize to unseen object-types

- An adversarial fashion such that models are penalized for encoding features that contain object-type information

- adversarial discriminator branches that attempt to classify object-types

- from the features output by the upstream part of detection networks, and penalize the model training if they are successful

- Trained in an alternating manner such that the discriminators are frozen when the rest of the model is updated and vice versa

- Loss

- Use the standard categorical cross-entropy loss in discriminators

- the cross-entropy loss for object-or-not classification

- smooth L1 loss for bounding box regression

- the negative entropy of discriminator predictions

- Model Instantiation

- can be applied to both two-stage and one-stage detectors

- implemented with the MMDetection framework

- Faster R-CNN

- multiclass object-type classification layer → a binary object-or-not layer

- add adversarial discriminator on top of the feature extraction layer

- SSD (300)

- SSD models contain classification and regression layers for making predictions at each depth-level

- each object-type classification layer → a binary object-or-not layer

- attach an adversarial discriminator at each depth-level
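The loss terms listed in this section can be sketched for a single prediction as follows. This is a minimal pure-Python sketch under stated assumptions, not the authors' implementation: the function names, the weight `alpha` on the entropy term, and the smooth L1 threshold `beta` are all hypothetical, and a real model would compute these over batches inside a framework such as MMDetection.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Categorical cross-entropy for one sample with an integer target."""
    return -math.log(softmax(logits)[target])


def entropy(logits):
    """Shannon entropy of the softmax distribution (max when uniform)."""
    return -sum(p * math.log(p) for p in softmax(logits) if p > 0)


def smooth_l1(pred, target, beta=1.0):
    """Smooth L1 summed over box coordinates (beta is an assumed threshold)."""
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total


def detector_loss(obj_logits, obj_target, box_pred, box_target,
                  disc_logits, alpha=1.0):
    """Objective for the detector while the discriminator is frozen.

    Object-or-not cross-entropy + box regression + negative entropy of the
    discriminator's prediction. `alpha` is an assumed weighting factor.
    """
    return (cross_entropy(obj_logits, obj_target)
            + smooth_l1(box_pred, box_target)
            - alpha * entropy(disc_logits))


def discriminator_loss(disc_logits, type_target):
    """Objective for the discriminator while the detector is frozen."""
    return cross_entropy(disc_logits, type_target)
```

Minimizing the negative entropy pushes the discriminator's output toward a uniform distribution, which is one way to read "penalize the model if the discriminator succeeds": features that reveal the object type make the detector's loss larger.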

Experiments

- measure for

- generalization to unseen object types

- the downstream utility of trained models

- Generalization to Unseen Object-types

- use average recall (AR) at various numbers (k ∈ {3, 5, 10, 20, 30, 100, 300, 1000}) of allowed bounding box predictions

- Exp1

- train on VOC07+12 → 17 seen classes, 3 unseen classes

- use F1 scores to determine easy, medium, and hard classes

- VOC model → COCO validation set

- Results

- FRCNN

- Agnostic >>> Aware on unseen classes

- the margin in recall becomes larger as the classes become harder to generalize to

- Results on the COCO unseen classes show that the adversarial model performs the best overall

- SSD

- Agnostic >>> Aware

- Adversarial model performs the best overall

- some reduction in recall on the seen set

- results on COCO show consistent improvements from adversarial learning, especially for medium and large sized objects

- Exp2

- train on COCO 2017 train set → evaluate on non-overlapping classes in the Open Images V6 test set

- Result

- Class-agnostic models generalize better than class-aware models, with those trained adversarially from scratch performing the best overall

- adversarially trained (from scratch) models perform the best in both settings

- models finetuned from the pretrained class-aware baselines

- perform worse than those that are trained from scratch

- likely due to the difficulty of unlearning discriminative features for object-type classification and realigning to learn type-agnostic features

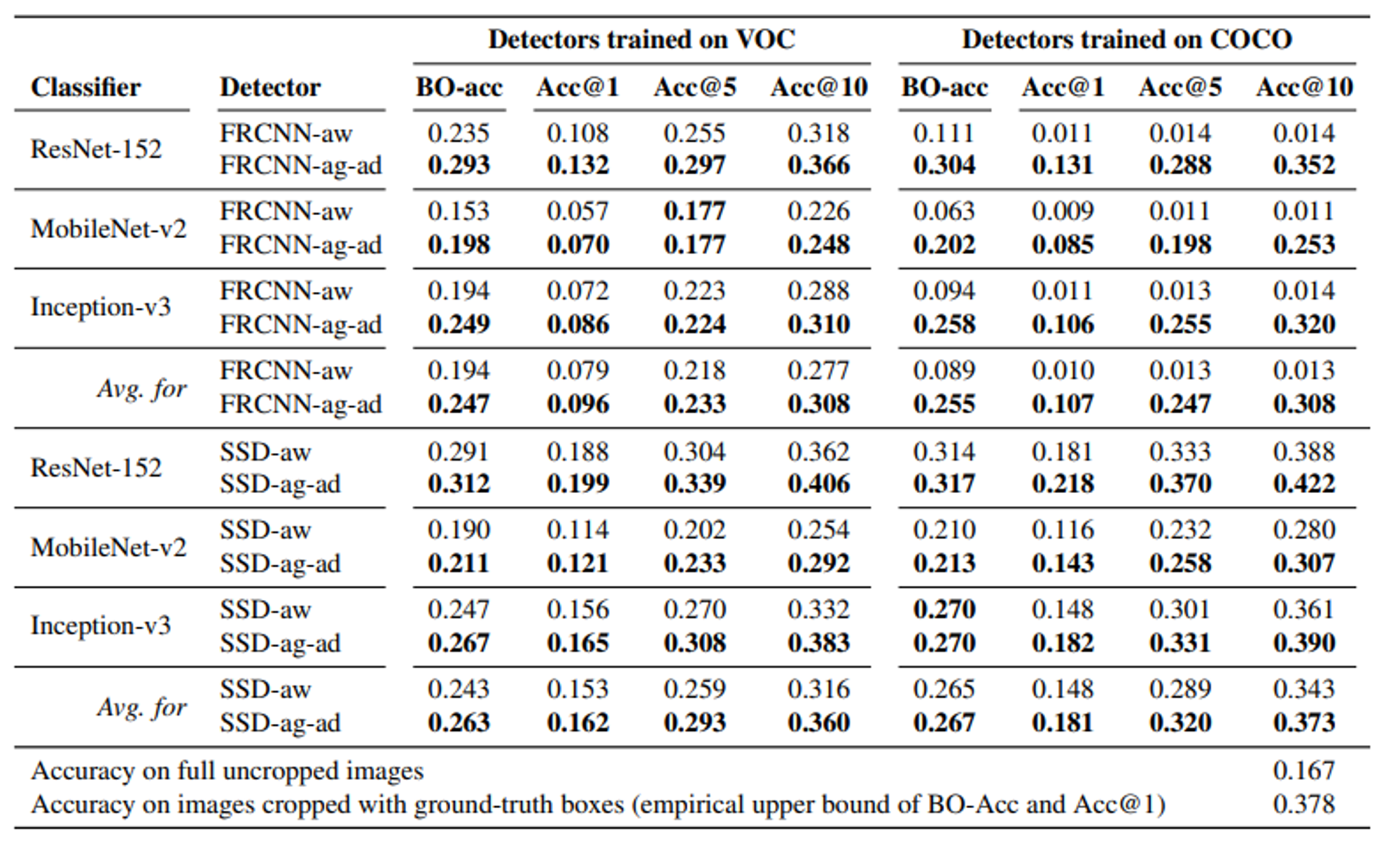
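Recall at k allowed predictions, the metric used in the generalization experiments above, can be sketched as below. This is a simplified sketch, not the official evaluation code: it uses a single IoU threshold of 0.5 and lets one prediction match several ground-truth boxes, both of which are assumptions made for brevity. The helper names `iou` and `recall_at_k` are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def recall_at_k(images, k, iou_thr=0.5):
    """Fraction of ground-truth boxes covered by the top-k predictions.

    `images` is a list of (gt_boxes, pred_boxes, scores) triples, one per
    image. Ground-truth boxes are pooled over all images.
    """
    hits, total = 0, 0
    for gt_boxes, pred_boxes, scores in images:
        # Keep only the k highest-scoring predictions for this image.
        order = sorted(range(len(pred_boxes)),
                       key=lambda i: scores[i], reverse=True)[:k]
        top = [pred_boxes[i] for i in order]
        hits += sum(1 for g in gt_boxes
                    if any(iou(g, p) >= iou_thr for p in top))
        total += len(gt_boxes)
    return hits / total if total else 0.0


images = [(
    [(0, 0, 10, 10), (20, 20, 30, 30)],                    # ground truth
    [(0, 0, 10, 10), (50, 50, 60, 60), (20, 20, 30, 30)],  # predictions
    [0.9, 0.8, 0.7],                                       # scores
)]
recalls = {k: recall_at_k(images, k) for k in (1, 2, 3)}
```

Because no false positive is ever counted, the score can only go up as k grows, which is why the metric is reported at several values of k.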

- Downstream Utility

- widespread downstream utilization of class agnostic bounding boxes for extracting objects in images and using them for various visual reasoning tasks

- predict M bounding boxes for each image, which are then used to crop the image into M versions

- ImageNet classifiers are then used to predict the object class from the cropped images

- BO (best overlap) - the classifier's prediction is taken on the crop with the highest intersection over union (IoU) with the ground truth

- ACC@M - the classifier’s prediction on at least one of the M crops needs to be correct

- VOC → 20 classes / objnet → 300 classes

- results

- the adversarially trained class-agnostic models perform better than the baselines in general on both Accuracy@M and Best-overlap accuracy
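The two downstream metrics above can be sketched per image as follows. This is an illustrative sketch only: it assumes the classifier's predictions on the M crops are already available as a list of labels, and the helper names `acc_at_m` and `best_overlap_correct` are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def acc_at_m(crop_predictions, true_label):
    """ACC@M: correct if the classifier is right on at least one crop."""
    return any(p == true_label for p in crop_predictions)


def best_overlap_correct(crop_boxes, crop_predictions, gt_box, true_label):
    """BO: judge only the crop whose box overlaps the ground truth most."""
    best = max(range(len(crop_boxes)),
               key=lambda i: iou(crop_boxes[i], gt_box))
    return crop_predictions[best] == true_label


crop_boxes = [(0, 0, 10, 10), (2, 2, 12, 12)]  # M = 2 predicted boxes
crop_predictions = ["cat", "dog"]              # classifier output per crop
gt_box = (0, 0, 10, 10)

hit_any = acc_at_m(crop_predictions, "dog")
hit_best = best_overlap_correct(crop_boxes, crop_predictions, gt_box, "dog")
```

In this toy case the second crop is classified correctly, so ACC@M counts the image as a hit, while BO does not because the best-overlapping crop was labelled "cat". BO is therefore the stricter of the two.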

Conclusion

- Formulated the novel task of class-agnostic object detection

- Presented training and evaluation protocols for benchmarking models

- generalization to new object-types

- downstream utility in terms of object recognition

- Presented a few intuitive baselines and proposed a new adversarially trained model that penalizes the objective if the learned representations encode object-type

For us

- Limitation of AR

- does not consider precision

- needs many proposals

- Agnostic - easy to apply: just use a binary cross-entropy loss

- Adversarial - more effective when there is a lot of unseen data

- Idea

- Learn background features

- similar environments
